Splitting the Thread - A finer look at auto-parallelization

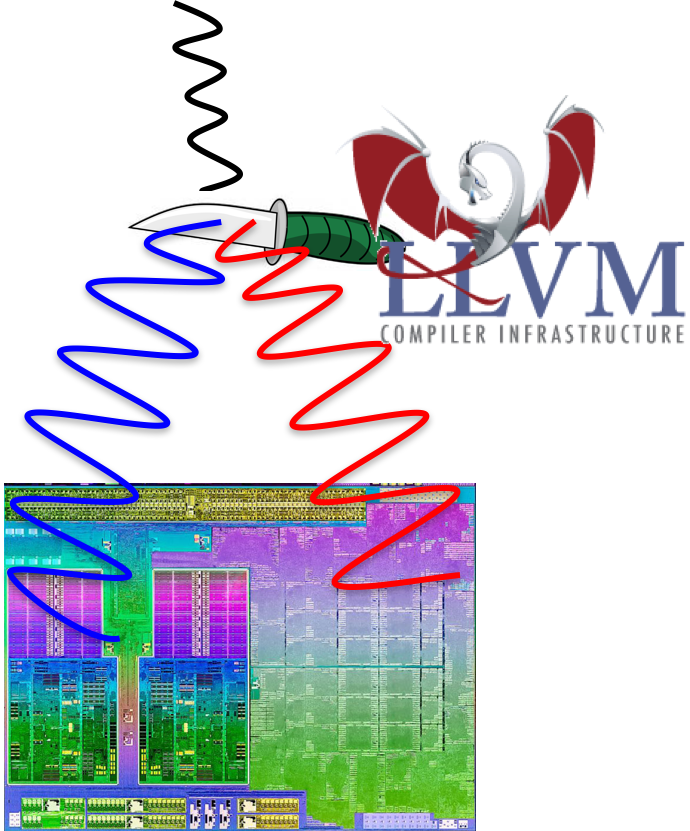

Performance improvements of current CPUs are mainly achieved by offering ever more cores and wider SIMD units. However, it is challenging and time-consuming for programmers to make good use of these parallel resources. Auto-parallelization aims to transfer this effort to the compiler. Current approaches focus on data-level parallelism, distributing identical work packages to homogeneous resources. Future hardware will be increasingly heterogeneous but still tightly integrated, as demonstrated by AMD's APU architecture, which integrates x86 CPUs with GPUs, or the Xilinx Zynq architecture, which combines ARM CPUs with FPGA fabric. We see this as both a challenge and an opportunity for auto-parallelization.

In this thesis, you will explore new, explicitly heterogeneous approaches to auto-parallelization. For example, one thread could perform memory loads and forward the data to a compute thread, which in turn supplies its results to a store thread. Alternatively, one thread could cover most of the control decisions and leave regular computations, in particular floating-point operations, to one or several distinct compute threads.
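To make the first pattern concrete, the following is a minimal hand-written sketch of a load/compute/store split in C++17. It only illustrates the kind of code such a transformation might produce, not the approach to be developed in the thesis. The names `Channel` and `run_pipeline`, the mutex-based queue, and the example computation `x * x + 1` are all assumptions chosen for illustration.

```cpp
#include <cassert>
#include <condition_variable>
#include <cstddef>
#include <mutex>
#include <optional>
#include <queue>
#include <thread>
#include <utility>
#include <vector>

// Hypothetical helper: a minimal blocking queue connecting two pipeline stages.
template <typename T>
class Channel {
public:
    void push(T value) {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            queue_.push(std::move(value));
        }
        ready_.notify_one();
    }

    // Signals that no further items will arrive.
    void close() {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            closed_ = true;
        }
        ready_.notify_all();
    }

    // Blocks until an item is available; returns std::nullopt once the
    // channel is closed and fully drained.
    std::optional<T> pop() {
        std::unique_lock<std::mutex> lock(mutex_);
        ready_.wait(lock, [this] { return !queue_.empty() || closed_; });
        if (queue_.empty()) return std::nullopt;
        T value = std::move(queue_.front());
        queue_.pop();
        return value;
    }

private:
    std::mutex mutex_;
    std::condition_variable ready_;
    std::queue<T> queue_;
    bool closed_ = false;
};

// Computes out[i] = in[i] * in[i] + 1.0 with three specialised threads:
// one only loads, one only computes, one only stores.
std::vector<double> run_pipeline(const std::vector<double>& in) {
    struct Item { std::size_t index; double value; };
    std::vector<double> out(in.size());
    Channel<Item> loaded, computed;

    std::thread loader([&] {
        for (std::size_t i = 0; i < in.size(); ++i) loaded.push({i, in[i]});
        loaded.close();
    });
    std::thread computer([&] {
        while (auto item = loaded.pop())
            computed.push({item->index, item->value * item->value + 1.0});
        computed.close();
    });
    std::thread storer([&] {
        while (auto item = computed.pop()) out[item->index] = item->value;
    });

    loader.join();
    computer.join();
    storer.join();
    return out;
}
```

Calling `run_pipeline({1.0, 2.0, 3.0})` returns a vector holding `2.0`, `5.0`, and `10.0`. The lock-based queue keeps the sketch short; on real hardware its synchronization cost would likely outweigh the benefit for such a cheap computation, which is one of the performance questions the thesis would need to examine.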

A well-suited tool for this task is the LLVM compiler infrastructure, with which we have had positive experience in previous projects. It provides working front-ends for various source languages and back-ends for different CPU architectures, and it allows individual transformation passes to be integrated that rewrite the intermediate code according to the new ideas to be explored in this thesis.

Tasks

- Exploration of program patterns that allow heterogeneous multi-threading

- Implementation of LLVM passes that split programs according to such patterns

- Analysis of performance implications

Recommended Skills

- Solid programming background (C++)

- Interest in compiler technology