HPC Datacenter (Building X)

After a two-year construction phase, the HPC data center in Building X was handed over to PC2 in November 2021 for its intended purpose. This was a major milestone for PC2 and for Paderborn University as a whole. The planning and construction of the data center were funded by the federal government and the state of North Rhine-Westphalia.

The official handover of the Noctua 2 HPC system took place during the festive opening ceremony in April 2022, in the presence of Isabel Pfeiffer-Poensgen, NRW Minister for Culture and Science, and Dinah Heidemann, representative of the NRW Bau- und Liegenschaftbehörde BLB.

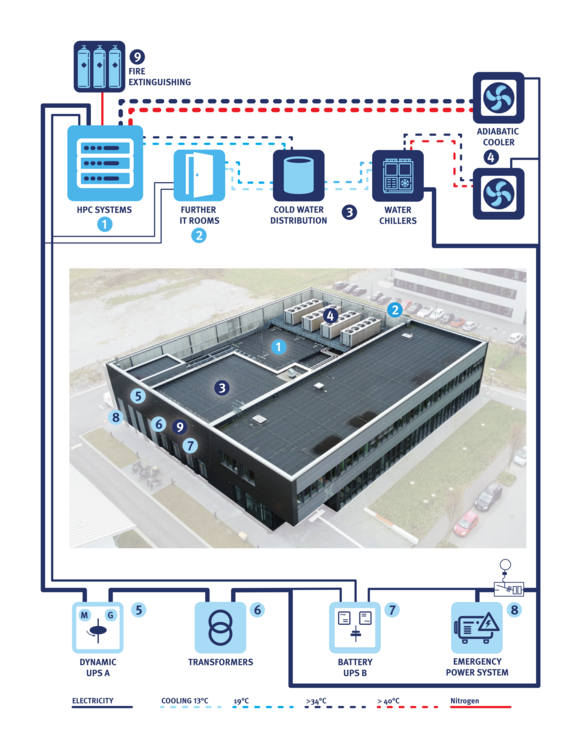

The HPC systems are located in a computer room with a floor area of over 330 m², a freely usable ceiling height of 5 m and a 1 m deep raised floor.

The computer room is divided into three segments. Each segment can accommodate one large HPC system with its specific requirements for electricity and cooling.

Power is supplied to the HPC systems via a busbar system and ceiling-mounted outlet boxes, with a maximum capacity of over 1 megawatt per HPC system. An electrical capacity of 2.6 megawatts is currently available for IT systems, and an expansion to over 6 megawatts is being planned.

The piping for the two cooling circuits is located in the raised floor: a cold-water circuit with an inlet temperature of 19°C and a warm-water circuit with an inlet temperature above 32°C. The warm-water circuit can discharge up to 1 megawatt per segment (1.4 megawatts planned), depending on the inlet and outlet temperatures allowed by the HPC system. The cold-water circuit has a total capacity of up to 700 kW and also supplies the chillers for air cooling (1 megawatt is planned).

An early fire detection system and fire detection sensors raise the alarm and, if necessary, trigger extinguishing by introducing nitrogen.

The cooling systems of the data center were designed so that the waste heat of the computer systems can be dissipated and reused highly efficiently. To this end, we consistently rely on warm-water cooling, which can dissipate at least 85% (typically 95%) of the waste heat. To achieve a flow temperature of 32°C for the warm-water cooling circuit all year round, the heat exchangers on the roof can be moistened with water on particularly hot days. The evaporating water cools the heat exchangers and lowers the temperature of the water they supply, a process known as adiabatic cooling.

Because the return temperature of the warm-water circuit is high, the waste heat can be used to heat buildings. A local heating network is being built on the university campus for this purpose. The planned new campus buildings in the immediate vicinity of the data center will be the first to benefit from it.

At most 15% (typically 5%) of the waste heat is dissipated in the traditional way via air cooling, which is much more energy-intensive because the cold is generated by compression chillers and distributed by fans. The compressors are only required when the outside air temperature is above 8°C; below that, free cooling can be used instead.
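The split between the two cooling paths and the free-cooling threshold can be illustrated with a short Python sketch. The percentages and the 8°C limit are taken from the text; the function names and the 1,000 kW example load are assumptions chosen for illustration and do not describe the data center's actual control software.

```python
from __future__ import annotations

# Illustrative sketch only: function names and the example load are assumptions.

# Outside air temperature above which the compressors are needed (from the text).
FREE_COOLING_LIMIT_C = 8.0


def split_waste_heat(it_heat_kw: float, warm_water_share: float = 0.95) -> tuple[float, float]:
    """Return (warm-water heat, air-cooled heat) in kW for a given waste-heat load.

    warm_water_share is typically 0.95 and at least 0.85 according to the text.
    """
    if not 0.0 <= warm_water_share <= 1.0:
        raise ValueError("warm_water_share must be between 0 and 1")
    warm_water_kw = it_heat_kw * warm_water_share
    air_kw = it_heat_kw - warm_water_kw
    return warm_water_kw, air_kw


def compressors_required(outside_temp_c: float) -> bool:
    """True if compression chillers are needed, False if free cooling suffices."""
    return outside_temp_c > FREE_COOLING_LIMIT_C


if __name__ == "__main__":
    # Hypothetical 1,000 kW waste-heat load, typical and worst-case split.
    for share in (0.95, 0.85):
        warm, air = split_waste_heat(1000.0, share)
        print(f"share={share:.2f}: warm water {warm:.0f} kW, air {air:.0f} kW")
    for temp_c in (5.0, 20.0):
        print(f"{temp_c:.0f} °C outside: compressors required = {compressors_required(temp_c)}")
```

For the hypothetical 1,000 kW load, the air-cooled share is about 50 kW in the typical case and about 150 kW in the worst case, which is why the more energy-intensive air path matters little for overall efficiency.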

Fail-safe operation requires a specially designed power supply for the IT systems and the operation-critical technical systems. We therefore always use two separate power supply lines for the core components of the HPC system, which include the data storage systems, the server systems for administrative purposes and the core switches of the networks. In data center X, this concept is implemented by power supply line A, protected by a dynamic UPS system (mains filter), and power supply line B, protected by a battery-backed uninterruptible power supply.

In the event of a prolonged failure of the mains supply, the battery UPS line B is supplied with power via an emergency power system with a diesel engine.

This setup is technically complex, rather maintenance-intensive because of the batteries used, and not ideal from a sustainability perspective because of the increased energy consumption.

To limit this disadvantage, power supply line B is rated at 400 kW, the minimum size required for this function. Supply line A therefore not only serves as the second leg of the supply for the most important IT components, but is also the only, and thus central, supply path for the large number of compute nodes.

In the mains filter system, power is regenerated by a motor-generator combination, which filters out disturbances and fluctuations that may enter the building through the mains. A rotating flywheel in the system stores enough energy to bridge power outages for at least half a minute. Supply line A is designed for an output of over 2,000 kW (up to 6,000 kW is planned), so the high efficiency of the mains filter system benefits the entire data center.
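To give a feeling for the amount of energy involved, the following Python sketch estimates the usable energy a flywheel must deliver to bridge an outage, using energy = power × time. It assumes, purely for illustration, that the full 2,000 kW design output is drawn for exactly 30 seconds and ignores conversion losses; the function name is hypothetical and the real system's rating is not stated in the text.

```python
# Illustrative estimate only: full design load for the whole bridging time
# and zero conversion losses are assumptions, not data from the facility.


def bridging_energy_kwh(load_kw: float, bridge_seconds: float) -> float:
    """Energy in kWh needed to carry load_kw for bridge_seconds."""
    if load_kw < 0 or bridge_seconds < 0:
        raise ValueError("load and duration must be non-negative")
    return load_kw * bridge_seconds / 3600.0  # kW * s -> kWh


if __name__ == "__main__":
    current = bridging_energy_kwh(2000.0, 30.0)   # current design output
    planned = bridging_energy_kwh(6000.0, 30.0)   # planned expansion
    print(f"2,000 kW for 30 s: {current:.1f} kWh")
    print(f"6,000 kW for 30 s: {planned:.1f} kWh")
```

Under these assumptions, bridging half a minute at 2,000 kW requires roughly 17 kWh (60 MJ) of usable energy, and about 50 kWh at the planned 6,000 kW.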

Operation-critical technical systems such as pumps, the fans of the air-circulation cooling units and chillers are protected only by the emergency power system. It becomes available after a downtime of just a few seconds, and these systems can easily tolerate such a brief interruption.

Together with data center X, the existing computer rooms of PC2 in data center O provide cross-site geo-redundancy. More than 1,000 communication lines, implemented as fiber-optic and copper cables and laid over two separate cable routes, connect the network rooms of the two data centers. The central storage system is distributed across both sites so that if one site fails, the unaffected site can continue to provide access to critical user data. This avoids service interruptions and data loss.

The efficient operation of HPC systems is an important criterion for us and is also required by current and upcoming legislation on the sustainability of data centers.

The Noctua 2 HPC system features direct liquid cooling (DLC) for high coolant temperatures and thus makes optimum use of the efficiency potential of the indirect free cooling of the data center.

Power Usage Effectiveness (PUE) is a key figure for estimating the energy efficiency of a data center: the ratio of the total energy consumed by the facility to the energy consumed by the IT equipment. The closer the value is to 1.0, the more energy-efficient the data center is and the better its energy balance. We are very pleased that the evaluation of the first months of operation confirms that the PUE value of 1.1 targeted in the planning has been achieved in data center X by using such HPC systems with DLC.
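PUE is commonly computed as total facility energy divided by IT equipment energy. The following Python sketch shows this calculation and what a PUE of 1.1 implies for the infrastructure overhead. The energy readings and function names are hypothetical values chosen for illustration and are not measurements from data center X; only the 1.1 target and the 2.6 megawatt IT capacity come from the text.

```python
# Illustrative sketch: the meter readings below are hypothetical.


def pue(total_facility_kwh: float, it_kwh: float) -> float:
    """Power Usage Effectiveness: total facility energy / IT energy."""
    if it_kwh <= 0:
        raise ValueError("IT energy must be positive")
    return total_facility_kwh / it_kwh


def overhead_kw(it_load_kw: float, pue_value: float) -> float:
    """Power drawn by cooling, power conversion, etc. for a given IT load."""
    return it_load_kw * (pue_value - 1.0)


if __name__ == "__main__":
    # Hypothetical period: 1,100 kWh at the facility meter, 1,000 kWh for IT.
    print(f"PUE = {pue(1100.0, 1000.0):.2f}")
    # At the currently available 2.6 MW of IT capacity and a PUE of 1.1:
    print(f"Overhead = {overhead_kw(2600.0, 1.1):.0f} kW")
```

With a PUE of 1.1, every kilowatt of IT load requires about 0.1 kW for cooling and power infrastructure, so a fully used 2.6 megawatt IT capacity would correspond to roughly 260 kW of overhead.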

As the preceding sections show, sustainability aspects are of great importance in the planning and operation of the new data center. We are therefore striving to have our data center certified with the "Blue Angel" environmental label (DE-UZ 228), which distinguishes data centers that are operated in an energy-efficient and environmentally friendly manner. In a study sponsored by the German Federal Environmental Agency and supported by a specialized consulting firm, we are examining whether the criteria for the eco-label can already be met and what deficits and potentials exist.

The most important requirement categories are:

- a particularly energy-efficient, climate-friendly and resource-saving operation of the technical building equipment

- the development and implementation of a long-term strategy to increase energy and resource efficiency

- empowering users to implement their own measures to increase energy efficiency

- the implementation of operational monitoring and transparent reporting to ensure energy-efficient operation

An interim report on the Blue Angel certification study, prepared by a specialized consulting firm and sponsored by the German Federal Environmental Agency, is available. Monitoring and energy-efficient operation of the facility and the computer systems are already well established. The Blue Angel certification is being prepared as part of the infrastructure expansion, which is scheduled for completion in 2026.

In the first few months of operation, dozens of groups of visitors have already been guided through the data center.

In addition to interested employees and students of Paderborn University, numerous scientists as well as operators and planners of data centers from all over Germany have learned about the underlying concepts and visited the technical facilities.

Regular tours are offered, and individual tours can also be arranged upon request.